Adversarial Exposure Validation (AEV): Best Practices and Adoption Roadmap

Cybermindr Insights

Published on: December 12, 2025

Last Updated: February 5, 2026

According to IBM’s Cost of a Data Breach Report 2025, the global average cost of a data breach this year was $4.4 million. Phishing accounted for 30% of attacks, underscoring the persistent role of human error in the security landscape. At the same time, supply chain breaches rose to almost 20% of all incidents this year, up from 15% in 2024. These trends highlight risks that traditional vulnerability management tools cannot address alone.

This is where Adversarial Exposure Validation (AEV) helps. AEV is a security approach that simulates real-world adversary tactics to validate whether theoretical exposures can actually be exploited. This article explains what AEV is and what it offers, outlines best practices for integrating it, and provides an adoption roadmap that helps security leaders turn exposure validation into a competitive edge.

Understanding Adversarial Exposure Validation (AEV): Foundations and Benefits

Gartner’s 2025 Market Guide for Adversarial Exposure Validation defines AEV as “technologies that deliver consistent, continuous, and automated evidence of the feasibility of an attack.” Gartner positions AEV as an emerging category within the Continuous Threat Exposure Management (CTEM) framework.

These technologies confirm whether and how potential attack techniques could exploit an organization and circumvent its prevention and detection controls. They do this by executing attack scenarios and modeling or measuring the outcome to prove that an exposure exists and is exploitable.

At its core, AEV bridges the divide between offensive and defensive security by generating real-time, evidence-based insights into an organization's attack surface. As advanced threats such as artificial intelligence (AI)-fueled attacks proliferate, AEV also enables teams to move from reactive firefighting to proactive fortification. Gartner identifies a set of common and mandatory features for AEV technologies.

Common Features of Adversarial Exposure Validation

- Prebuilt attack scenario library - A continuously updated marketplace of prebuilt attack scenarios that can be used for validation.

- Customizable dashboards - These allow security teams to align test results with business or operational KPIs.

- Data integration - The ability to seamlessly pull insights from asset discovery, attack surface management, and vulnerability scanners.

- Externally hosted Point of Attack (POA) - The ability to launch attacks from a point of attack (POA) system that the provider hosts and delivers through a SaaS model.

- Workflow integrations - The ability to mobilize findings through integration with workflow, ticketing, or defensive systems, including automated ticketing and SIEM/SOAR synchronization for faster response.

- Detection engineering recommendations - Recommendations for vendor-specific detection engineering content, based on actual test results, that improve defensive posture.

- Role-based reporting - Contextual reports tailored to roles such as CISOs, red teams, and compliance officers. Each report should include the context its audience needs, such as vendor scorecards, industry peer baselining, or attack path graphics.

Mandatory Features of Adversarial Exposure Validation

- Multi-vector Attack Execution - Performing attack scenarios for multiple threat vectors. Delivered outputs include attack scoring, security-framework-aligned reporting, and prioritized lists of attack scenario findings with estimated impact and suggested remediation actions.

- Empirical Defense Validation - Providing empirical results about an organization’s defensive posture as it relates to various attack techniques and scenarios. The validation results data should significantly improve upon more theoretical data and provide insights into urgently needed changes.

- Scalable Automation - The ability to scale defensive testing with vendor-supplied attack scenarios that need minimal or no hacking knowledge to execute and obtain results data.

- Automated Scheduling - Scheduling tests automatically increases testing frequency without human intervention, reducing errors and producing better trend data for exposure management and defensive operations.

Difference Between AEV and Traditional Vulnerability Management

Traditional vulnerability management identifies potential weaknesses, while Breach and Attack Simulation (BAS) platforms test the effectiveness of security controls. AEV, by contrast, verifies exploitability and provides a "pass/fail" decision on whether a threat actor can chain exposures into a breach. AEV aligns closely with the validation and mobilization stages of the Continuous Threat Exposure Management (CTEM) process.

Unlike traditional tools such as vulnerability scanners and BAS platforms, AEV focuses on empirical outcomes, proving not only whether a control exists but also whether it works against real threats.
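To make the "pass/fail" idea concrete, the chaining question can be framed as graph reachability. The sketch below is a minimal illustration, not how any particular AEV product works: it assumes each exposure an AEV run has actually exploited is recorded as an edge from one attacker foothold to another, and it uses breadth-first search to check whether validated exposures chain from an entry point to a critical asset. The function name `breach_path`, the foothold labels, and the exposure IDs are all hypothetical.

```python
from __future__ import annotations

from collections import deque


def breach_path(edges: dict[str, list[tuple[str, str]]], entry: str, target: str) -> list[str] | None:
    """Breadth-first search over validated exposures.

    `edges` maps a foothold to (next foothold, exposure ID) pairs and is assumed
    to contain only exposures an AEV run actually exploited. Returns the exposure
    IDs on a shortest chain from entry to target ("fail"), or None ("pass").
    """
    came_from: dict[str, tuple[str, str] | None] = {entry: None}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back through the recorded steps to rebuild the chain.
            chain: list[str] = []
            step = came_from[node]
            while step is not None:
                prev, exposure_id = step
                chain.append(exposure_id)
                step = came_from[prev]
            return chain[::-1]
        for nxt, exposure_id in edges.get(node, []):
            if nxt not in came_from:
                came_from[nxt] = (node, exposure_id)
                queue.append(nxt)
    return None


edges = {
    "internet": [("web-server", "EXP-1")],
    "web-server": [("db-server", "EXP-2")],
}
print(breach_path(edges, "internet", "db-server"))  # ['EXP-1', 'EXP-2']
print(breach_path(edges, "db-server", "internet"))  # None
```

Returning the chain, rather than only a boolean, tells remediation teams which exposures to break; fixing any one of them removes that particular path, although other paths may remain.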

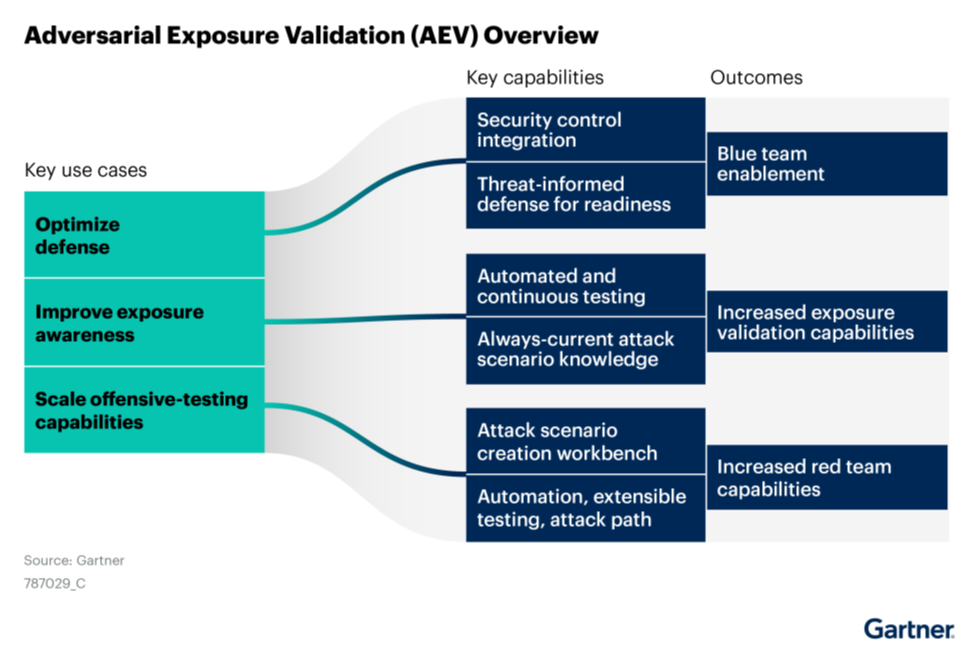

AEV offers several benefits, which include:

- Defensive optimization - AEV helps fine-tune controls, reducing false positives in alerts.

- Improved exposure awareness - By scoring risks based on the likelihood of exploitation rather than on Common Vulnerability Scoring System (CVSS) scores alone, AEV cuts through noise.

- Scaled offensive testing - Security teams can run large numbers of attack scenarios every week, which is a boon for understaffed security operations centers (SOCs).
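As an illustration of exploitability-based scoring, the following sketch ranks findings by whether an AEV run actually validated them before falling back to CVSS. The `Finding` fields and the three-level sort key are assumptions chosen for clarity; real platforms use their own, usually richer, scoring models.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Finding:
    finding_id: str
    cvss: float                   # CVSS base score, 0.0 to 10.0
    validated: bool               # hypothetical: did an AEV run actually exploit it?
    reaches_critical_asset: bool  # hypothetical: is it on a path to a critical asset?


def priority_key(f: Finding) -> tuple[int, int, float]:
    # Validated exploitability dominates, then business impact; CVSS only breaks ties.
    return (int(f.validated), int(f.reaches_critical_asset), f.cvss)


def prioritize(findings: list[Finding]) -> list[Finding]:
    return sorted(findings, key=priority_key, reverse=True)


findings = [
    Finding("FINDING-A", cvss=9.8, validated=False, reaches_critical_asset=False),
    Finding("FINDING-B", cvss=6.5, validated=True, reaches_critical_asset=False),
    Finding("FINDING-C", cvss=7.2, validated=True, reaches_critical_asset=True),
]
print([f.finding_id for f in prioritize(findings)])
# ['FINDING-C', 'FINDING-B', 'FINDING-A']
```

Under this ordering, a validated medium-severity finding outranks an unvalidated critical one, which is the shift in emphasis the bullet describes.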

AEV has numerous real-world use cases. For example, a financial services company can use AEV to run phishing simulations that validate whether its email gateways catch credential-harvesting attempts. Manufacturing companies can use it to rank supply chain exposures and mitigate the most likely breach vectors first. Organizations can also use AEV-style validation to continuously assess internal and third-party security posture, complementing, but not replacing, security rating platforms.

Best Practices for Adversarial Exposure Validation (AEV) Adoption

AEV adoption is not just about technology; it needs the right execution to maximize ROI. The following best practices can help you adopt AEV in your organization.

- Start by defining measurable outcomes aligned to one primary use case. Defensive optimization would be a good starting point if you are unsure where to begin.

- If your organization is resource-constrained, consider using AEV as part of a Penetration Testing as a Service (PTaaS) subscription. PTaaS providers offer a similarly wide range of testing and validation services, though typically on a more periodic basis. PTaaS also suits organizations that need frequent AEV but lack the in-house expertise to use the toolsets. The model is flexible and scalable, letting companies adjust the frequency and scope of testing to their specific requirements and resources.

- According to Gartner’s 2025 Market Guide, companies have different reasons for allocating budget to AEV solutions. Whatever the reason, base the purchase on provable justifications: build a data-driven business case and quantify the expected gains to obtain buy-in.

- Frequency is key. Consider moving from quarterly penetration tests to automated weekly AEV runs, and track trending metrics such as control bypass rates to guide remediation.

- Consider open source to gauge an AEV solution’s value. If you are unsure whether AEV will benefit your organization, open-source solutions can give you visibility into use cases and reporting data. The outcomes can also support a business case for expanding AEV through a commercial solution.

- Be aware of the potential challenges. Resource constraints can be eased by starting with managed service options, skill gaps by leveraging vendor training, and tool integration issues by prioritizing platforms with robust APIs and prebuilt integrations.

- Always take care of governance. Establish policies for safe simulation to avoid accidental disruptions.
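Safe-simulation policies are easiest to enforce when they are checked automatically before each run. The sketch below assumes a hypothetical policy with three rules (an allow-list of targets, an approved daily testing window, and a ban on destructive techniques) and reports every rule a planned run would break. The class, field, and host names are illustrative, not part of any vendor's API.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class SimulationPolicy:
    """Hypothetical safe-simulation policy; adapt to your own rules of engagement."""
    allowed_targets: frozenset[str]
    window_start: time  # approved daily window; assumed not to cross midnight
    window_end: time
    allow_destructive: bool = False


@dataclass(frozen=True)
class PlannedRun:
    targets: frozenset[str]
    start: time
    destructive: bool


def policy_violations(run: PlannedRun, policy: SimulationPolicy) -> list[str]:
    """Return every rule the planned run breaks; an empty list means it may proceed."""
    problems: list[str] = []
    out_of_scope = run.targets - policy.allowed_targets
    if out_of_scope:
        problems.append(f"out-of-scope targets: {sorted(out_of_scope)}")
    if not policy.window_start <= run.start <= policy.window_end:
        problems.append("start time is outside the approved testing window")
    if run.destructive and not policy.allow_destructive:
        problems.append("destructive techniques are not permitted")
    return problems


policy = SimulationPolicy(frozenset({"staging-web", "staging-db"}), time(1, 0), time(4, 0))
run = PlannedRun(frozenset({"staging-web", "prod-db"}), time(9, 30), destructive=True)
for problem in policy_violations(run, policy):
    print(problem)
```

Returning all violations, rather than stopping at the first, gives the operator the complete list to fix before rescheduling the run.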

Roadmap for AEV Implementation

Successful AEV implementation involves a phased rollout matched to your maturity level rather than a one-shot execution. Allow sufficient time to reach a full-scale rollout. The following phases outline how to implement AEV.

Phase 1: Assessment & Planning

Begin the implementation with a maturity audit. Map your attack surface using asset inventories, gap analyses, and frameworks such as MITRE ATT&CK. Next, define specific outcomes. Issue RFPs that focus on criteria such as multi-vector support, empirical reporting, and integrations. Finally, shortlist two or three vendors for demos. Assemble a cross-functional team spanning security, IT, and compliance for this phase.

Phase 2: Pilot & Initial Deployment

Deploy the solution in a sandbox first. Run baseline simulations targeting high-risk vectors, such as credential theft, and measure the results against your defined KPIs. Automate weekly runs and embed them within CTEM workflows to validate remediation effectiveness. Work with the vendor’s support team to resolve issues such as false positives.
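Validating remediation effectiveness amounts to comparing a retest against the baseline. This minimal sketch assumes each run is summarized as a mapping from a scenario name (the names here are hypothetical) to whether the simulated attack succeeded, and it classifies every scenario present in both runs.

```python
from __future__ import annotations


def compare_runs(baseline: dict[str, bool], retest: dict[str, bool]) -> dict[str, list[str]]:
    """Classify scenarios run in both passes; True means the simulated attack succeeded."""
    result: dict[str, list[str]] = {"remediated": [], "still_exploitable": [], "regressed": []}
    for scenario in sorted(baseline.keys() & retest.keys()):
        before, after = baseline[scenario], retest[scenario]
        if before and not after:
            result["remediated"].append(scenario)         # worked before, blocked now
        elif before and after:
            result["still_exploitable"].append(scenario)  # fix missing or ineffective
        elif after:
            result["regressed"].append(scenario)          # blocked before, works now
    return result


baseline = {"credential-theft": True, "phishing-link": True, "smb-lateral-movement": False}
retest = {"credential-theft": False, "phishing-link": True, "smb-lateral-movement": True}
print(compare_runs(baseline, retest))
# {'remediated': ['credential-theft'], 'still_exploitable': ['phishing-link'], 'regressed': ['smb-lateral-movement']}
```

Watching the "regressed" bucket matters as much as the "remediated" one, because a change elsewhere in the environment can reopen a path that previously held.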

Phase 3: Scaling & Optimization

Extend the implementation to cover all vectors and assets, and embed adversarial exposure validation in existing workflows. This builds confidence and can help reduce breach costs. As Gartner highlights, you can also leverage AI-assisted analytics to dynamically correlate exposures and prioritize attack paths.

Beyond these three phases, monitor and iterate on an ongoing basis. Use quarterly reviews to track metrics such as exploit success rates, report the observations to relevant stakeholders via dashboards, and adapt to emerging trends. Perform annual vendor audits to ensure alignment with your organization’s goals.
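Quarterly metrics such as exploit success rate reduce to simple arithmetic over run results. The sketch below assumes each scenario outcome is stored as a record with a run date and a success flag (a hypothetical schema) and computes, per calendar quarter, the number of successful scenarios divided by the number of scenarios run.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class ScenarioResult:
    run_date: date
    scenario: str
    succeeded: bool  # True if the simulated attack bypassed controls


def quarter(d: date) -> str:
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def success_rate_by_quarter(results: list[ScenarioResult]) -> dict[str, float]:
    """Exploit success rate per quarter: successful scenarios / scenarios run."""
    runs: defaultdict[str, int] = defaultdict(int)
    wins: defaultdict[str, int] = defaultdict(int)
    for r in results:
        q = quarter(r.run_date)
        runs[q] += 1
        wins[q] += int(r.succeeded)
    return {q: wins[q] / runs[q] for q in sorted(runs)}


outcomes = [
    ("2025-10-06", True), ("2025-11-03", True), ("2025-12-01", False), ("2025-12-29", False),
    ("2026-01-05", True), ("2026-01-12", False), ("2026-02-02", False), ("2026-02-09", False),
]
results = [ScenarioResult(date.fromisoformat(d), "credential-theft", ok) for d, ok in outcomes]
print(success_rate_by_quarter(results))
# {'2025-Q4': 0.5, '2026-Q1': 0.25}
```

A falling rate across quarters suggests remediation is working, while a rising one is a prompt to revisit controls. Segmenting the same calculation by scenario or by ATT&CK technique is a straightforward extension.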

Adversarial exposure validation marks an evolution in how organizations perform security testing and defend themselves against cyberthreats. It provides a more precise and actionable view of risk and shows how cybercriminals could exploit your systems. Because it aligns closely with the CTEM framework, AEV plays a critical role in providing continuous, scalable validation across all attack surfaces as organizations adopt CTEM.

From outcome-focused planning and frequent testing to a phased rollout, the best practices and roadmap described in this article help teams validate, prioritize, and fortify their defenses with precision.

Ready to strengthen your exposure management program?

Book a CyberMindr demo today.